In this post we respond to the business leaders, faculty, students, and friends who have asked us to help them stay up to date and make sense of the changes, opportunities, and challenges surrounding the new AI. Today we highlight the frenzy of changes that March brought to AI text and image generators such as ChatGPT and Midjourney.

March will likely go down in the history of AI as an inflection point, for four reasons:

- These technologies were shown to significantly improve in both scope and quality

- These technologies are starting to be embedded in industrial applications

- This is happening for both text and image generators

- Concern about the actual and potential misuse of these systems is growing

ChatGPT…

…Is improved

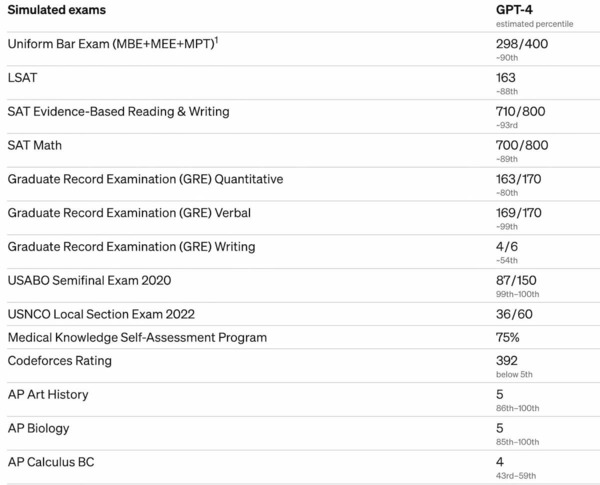

On March 14, OpenAI, the company that created ChatGPT, launched a new version of its underlying technology called GPT-4. This version shows significant improvements in the quality of ChatGPT’s responses to prompts, as well as in its ability to avoid inappropriate or silly responses. While AI companies often report system performance on standard research benchmarks, OpenAI broke with that convention by reporting how well the system did on commonly used human exams: it scored in the 90th percentile on the bar exam and the 86th percentile on the LSAT, and it kept its "not so good at math" label by scoring around the 50th percentile in AP Calculus, though that was a dramatic increase from about the 3rd percentile for the previous version. A percentile indicates the percentage of test-takers whose scores the system exceeded.
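To make the percentile definition above concrete, here is a minimal Python sketch of one simple convention: the share of a reference group scoring strictly below a given score. The cohort of scores is invented purely for illustration and is not real exam data, and official testing bodies may handle ties and score scaling differently.

```python
def percentile_rank(score, population):
    """Return the percentage of scores in `population` strictly below `score`."""
    if not population:
        raise ValueError("population must not be empty")
    below = sum(1 for s in population if s < score)
    return 100.0 * below / len(population)


# Hypothetical cohort of ten test-takers (illustrative only, not real exam data).
cohort = [120, 135, 140, 145, 150, 152, 155, 160, 165, 170]

# 9 of the 10 test-takers scored below 166, so this prints 90.0 (the 90th percentile).
print(percentile_rank(166, cohort))
```

Read this way, "90th percentile on the bar exam" means the system outscored roughly 90% of the human test-takers in the comparison group.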

Although Microsoft (which uses GPT-4 in Bing) has tried to convince us that the system’s errors are “usefully wrong,” users should still proceed with caution: it can still generate inaccurate text and hallucinate false information. In ChatGPT, part of the improvement lies in tighter control of the user interaction: if you tell it to stop hallucinating, it will now tell you that it doesn’t hallucinate. If you include a prompt that looks like a class assignment, it will tell you it is not supposed to complete your assignment. The free version of ChatGPT still uses the older GPT-3.5 model, while the paid subscription version uses GPT-4.

The system seems to change almost daily, so users are well advised to treat each session as an experiment until its behavior is confirmed.

…Does more

GPT-4 is also a multimodal model, meaning it can accept both image and text inputs while still producing its outputs as text (though that text can be computer code that generates images!). OpenAI claims that the model is more creative and collaborative than ever before and can solve difficult problems with greater accuracy. Examples abound on the internet of GPT-4 doing things GPT-3 could not do well, and Microsoft researchers published a massive 150-page research report outlining its improvement across a number of areas over the past months.

…A lot more

In a demonstration given to developers, GPT-4 was shown the outline of a website drawn on a piece of paper and asked to provide the computer code needed to bring the website to life, a task it completed successfully. While GPT-3 and similar models were known for being able to write code for small, specific tasks, this demo suggests GPT-4 is a big leap for coding assistance. Nevertheless, these features have not been fully released, and a demo is always a marketing exercise, not a research paper.

…Is entering products

On the same day it announced GPT-4, OpenAI also announced six partners that had integrated GPT-4 into new services. These organizations span a wide range of sectors, including Khan Academy and Duolingo in education, Morgan Stanley and Stripe in finance, Be My Eyes in healthcare, and even the Government of Iceland. Shortly afterward, on March 23, OpenAI announced that it had begun supporting plugins in ChatGPT for a small set of users, with plans to gradually roll out access on a larger scale. Plugins allow ChatGPT to reach out to other computers and retrieve the specific, up-to-date information a task requires. For example, if you want help making travel arrangements, a plugin could query an airline’s flight database and feed ChatGPT the information needed for that task. This should greatly expand ChatGPT’s usefulness for tasks that depend on recent or real-time data.

OpenAI also announced commercial access to its AI systems from other organizations’ computers through Application Programming Interfaces (APIs). These connections are essentially reverse plugins: they allow other computer systems to send data and requests to OpenAI’s computers and retrieve the answers automatically. This is likely to spawn a cottage industry of software developers building GPT functionality into their own applications. Microsoft, a heavy investor in OpenAI, announced a suite of product integrations, under the name Copilot, built around ChatGPT’s very strong coding skills.
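For readers curious what a "request" to these APIs looks like, here is a minimal Python sketch using only the standard library. It assumes OpenAI's Chat Completions endpoint and JSON format as documented in 2023 (check the official API reference, since details change); the prompt and `YOUR_KEY_HERE` are placeholders, and the helper name `build_chat_request` is hypothetical. The sketch only packages the request and does not send it.

```python
import json
import urllib.request

# Assumed endpoint for OpenAI's Chat Completions API (verify against the current docs).
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, api_key, model="gpt-4"):
    """Hypothetical helper: package a prompt as an HTTP POST another program could send."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # placeholder key, never hard-code a real one
        },
        method="POST",
    )


req = build_chat_request("Summarize this week's sales notes.", api_key="YOUR_KEY_HERE")

# Sending it requires a real key and network access; the reply would then be read like:
# with urllib.request.urlopen(req) as resp:
#     answer = json.load(resp)["choices"][0]["message"]["content"]
```

The point is the shape of the exchange: a developer's own software sends a structured request with a prompt and receives generated text back, with no chat window involved.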

Google and Microsoft Are Updating Search

At the time of the GPT-4 rollout, Microsoft announced that it had already been using GPT-4 in its updated Bing product. Given some of the unusual interactions people have had with that system, this was a double-edged admission.

Not to be completely left out, Google released its Bard chat-plus-search system a week after OpenAI released GPT-4. We’ve seen little enthusiasm for Bard, with many reports of strange behavior and a disconnect from expectations. One author summed it up like this: “ChatGPT is the most verbally dextrous, Bing is best for getting information from the web, and Bard is... doing its best. (It’s genuinely quite surprising how limited Google’s chatbot is compared to the other two.)”

Lots of Action with Image Generation Systems as Well

While the text world was reeling from so much new AI in just a week(!), so was the world of image generation. The day after ChatGPT was upgraded with GPT-4, Midjourney released version 5 of its AI image generation service. Like other image synthesizers, Midjourney generates images from prompts using an AI model trained on images scraped from internet websites and open databases. The two most striking improvements over prior versions are dramatically better photorealism and dramatically better rendering of humans, who had previously been marred by extra fingers and other distortions that could have earned them roles on Star Trek. The progress can be seen on a number of web pages that render the same prompts across Midjourney’s versions, such as this page from Ars Technica.

The Pope Gets Involved

As any junior-high jokester would have predicted, it took only five days for someone to use it to generate images that look like photographs of Donald Trump being arrested. Such natural-looking pictures were not possible with version 4. Images of the “Pope in Puffer” were not far behind. Le Monde did a good job of showing how to spot where the system falls short (keep watching fingers and other details).

At the same time (March 21), Microsoft launched an image creator in Bing called…Bing Image Creator. It is based on a version of OpenAI’s DALL·E image generation system. While for the past year I have consistently preferred Midjourney’s rendering to DALL·E’s, Bing will clearly win the usability war. I cannot recommend Midjourney to most users because it requires the Discord chat application and some background knowledge of the interface. Bing, by contrast, is very easy to use, provides good examples, and helps with image management. The only (minor) drawback is that it requires the Microsoft Edge browser and a free account.

And as if that were not enough change for one week, Adobe also released Firefly on March 21: a family of creative generative AI models embedded in Adobe tools to bring generative AI directly into customers' Adobe workflows. Generative image AI is no longer for the few.

Public Concern is Growing

If you’re not sure how to feel about these advancements, know that you’re not alone. On March 22, the Future of Life Institute published a letter, now signed by more than 50,000 individuals including prominent AI scientists and industry figures such as Elon Musk, calling for a six-month pause on the development of AI systems more powerful than GPT-4. In the interim, the signatories urge developers to collaborate with one another and with policymakers to establish a set of safety protocols for advanced AI design and development. The letter also calls for AI research and development to refocus on making these systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal.

While it is hard to imagine such a suspension, research on likely impacts continues. Researchers at OpenAI and the University of Pennsylvania predict that generative AI could affect at least 10% of the work tasks of eight out of ten US workers, while at least half of the work tasks of one out of five US workers could be affected. Specifically, professions involving programming and writing are expected to be more exposed, while those requiring scientific and critical thinking skills may be less vulnerable. To mitigate the potential impact of AI on job security, experts recommend that workers focus on developing and strengthening soft skills such as communication, creativity, and emotional intelligence. This is something of a simplification, since those skills have always distinguished high-functioning employees from others. What is clear is that we will always need individuals who can understand and articulate appropriate goals, set direction, evaluate work, and determine the best use of AI or other tools. Computer spreadsheets did not replace accountants; they shifted accountants’ work from arithmetic to strategy and consulting. We will be writing more about this in the near future.

As the month drew to a close, the UK government published what it billed as a “world leading approach to innovation in first artificial intelligence white paper to turbocharge growth,” titled “A pro-innovation approach to AI regulation.” Finally, on the last day of March, Italy placed a temporary ban on ChatGPT over privacy concerns, after it was reported that the interface had revealed the prompts and interactions of other users. OpenAI is now under investigation by Italian authorities for potential violations of the European Union's General Data Protection Regulation (GDPR). Because this regulation safeguards the privacy and data rights of people in the EU, the investigation is a significant legal issue for OpenAI and other AI companies operating in the region.

Takeaways

It is hard to believe that the many releases, advancements, and mistakes described above happened in the roughly two weeks between March 14th and March 31st. Quite remarkable. What are the takeaways that we need to have in mind as we go forward?

1) The quality of these tools is advancing quickly.

2) There's an arms race between the different companies that will keep pushing these technologies forward.

3) The arms race is leading to a situation where technologies are released while they are still a work in progress.

4) There's not enough time for people to digest either the operational issues or the social issues.

5) The behavior of these systems is still partially unpredictable, so experimentation and caution are always required.

6) Mythology and misperception abound.

Part of the problem, and also the great advantage, is that these systems generate text that looks so human that we let our guard down. But it is essential to remember that these are not thinking machines. They are text generation machines that are sometimes right and sometimes wrong. That's fine for some uses, like writing a fictional story, but not for others, such as tasks involving privacy and security.

And there's a lot we haven't covered here. For instance, automated code generation is already changing how many software developers work, and the quality of image generation is already changing how marketing and communications are done. There will be a lot to unpack here for a long time. These technical changes have economic, social, political, artistic, and other consequences. Here at Notre Dame, we think every discipline in the liberal arts will both be affected and have an important point of view to contribute to the dialogue about how this all unfolds. Keep experimenting, and be careful not to trust these technologies too deeply.

This post was written in collaboration with the New AI research team: Sydney Colgan, Rachel Lee, & Grace Hatfield. If you have questions or suggestions, you can email John at jbehrens@nd.edu.